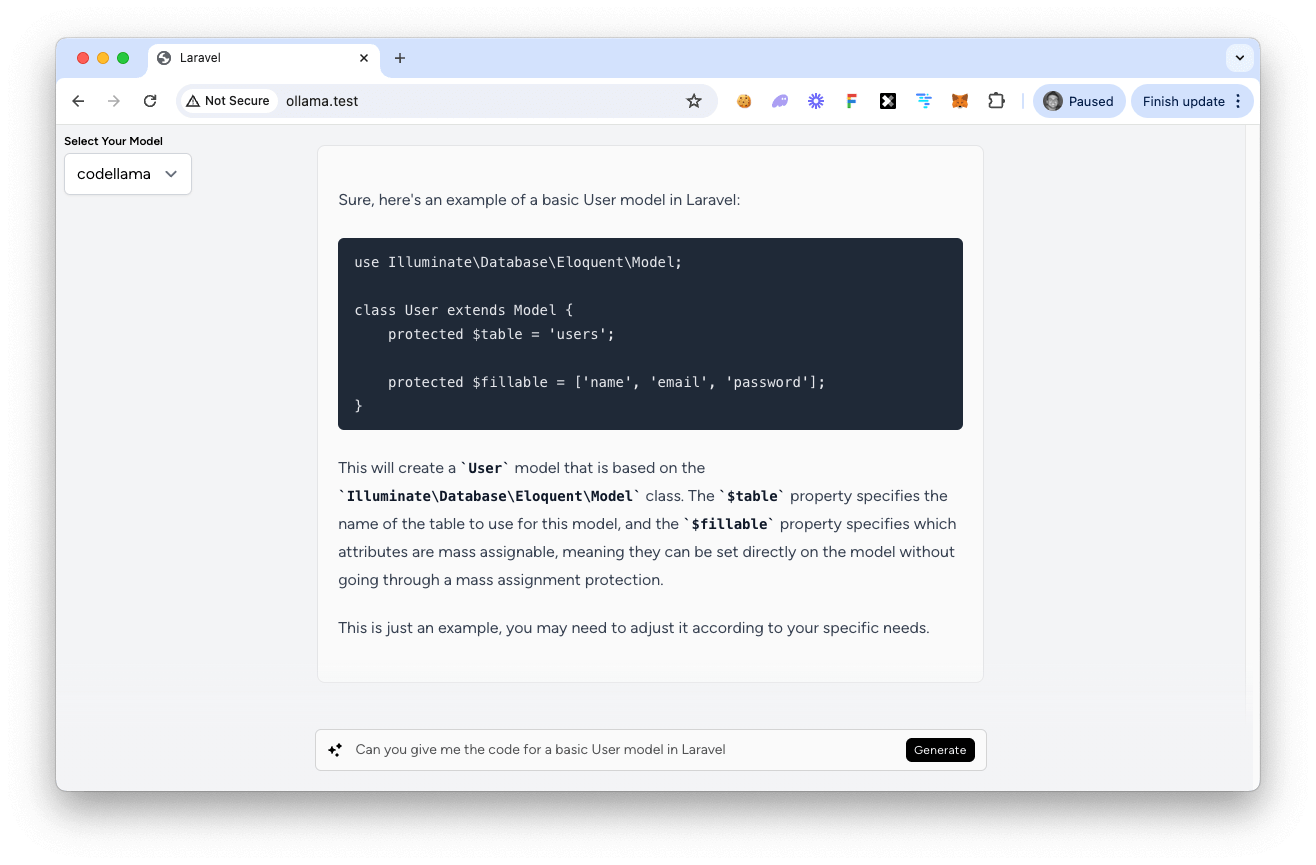

This app uses Laravel, Livewire, and Volt to create a simple interface that sends a prompt to an AI model served locally by Ollama and displays the model's response.

Download and install Ollama, then pull the model you want to use, for example:

```shell
ollama pull codellama
```

In Laravel, this application retrieves the response by sending a POST request to the following endpoint, which Ollama exposes locally:

```shell
curl -X POST http://localhost:11434/api/generate -d '{
  "model": "codellama",
  "prompt": "Write me a function that outputs the fibonacci sequence"
}'
```
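The same request/response exchange can be sketched in Python to show what comes back from the endpoint. This is not the app's own Laravel code, and the names `OLLAMA_URL`, `build_payload`, `join_stream` and `generate` are hypothetical, chosen for illustration. By default `/api/generate` streams its answer as newline-delimited JSON objects, each carrying a fragment of text in `response`, with the last object marked `"done": true`; sending `"stream": false` returns a single object instead. A minimal sketch, assuming Ollama is listening on its default port 11434:

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(model: str, prompt: str, stream: bool = True) -> bytes:
    """Encode the JSON body sent to /api/generate (hypothetical helper)."""
    return json.dumps({"model": model, "prompt": prompt, "stream": stream}).encode("utf-8")


def join_stream(lines) -> str:
    """Concatenate the "response" fragments of a streamed reply.

    Each non-empty line is one JSON object; the final one has "done": true.
    """
    parts = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between objects
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the last object carries stats, not more text
    return "".join(parts)


def generate(model: str, prompt: str) -> str:
    """POST a prompt to the local Ollama server and return the full answer."""
    req = urllib.request.Request(
        OLLAMA_URL,
        data=build_payload(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        # Iterating the response yields the body line by line, as bytes.
        return join_stream(line.decode("utf-8") for line in resp)
```

With Ollama running and `codellama` pulled, `generate("codellama", "Write me a function that outputs the fibonacci sequence")` returns the whole answer as one string. Reading the stream line by line lets a caller show partial output as it arrives; if that is not needed, sending `"stream": false` and reading the single `response` field is simpler.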

For testing, you can also get a response directly from the CLI:

```shell
ollama run codellama "Write me a function that outputs the fibonacci sequence"
```